A new generation of computing technology is emerging, not from factories or cleanrooms but from biological laboratories where human neurons are grown, trained, and wired into living machines.

These biocomputers are not futuristic prototypes. They exist now, and their backers believe they may solve some of the most pressing problems posed by the artificial intelligence boom. Unlike traditional systems that rely on silicon chips, biocomputers use clusters of lab-grown brain cells to process information.

These neurons, cultivated from human cells and connected to electrode arrays, are capable of forming networks that learn from feedback, adapt to new patterns, and interact with software systems in real time.

Two companies at the forefront of the field, one based in Australia and the other in Switzerland, are now offering access to this technology either through physical devices or remote platforms.

The Australian-developed machine, marketed for research purposes, integrates living neural tissue with hardware in what its creators describe as a “biological intelligence operating system.” It sells for around $35,000, though purchases are limited to qualified researchers.

The Swiss platform, meanwhile, provides virtual access to brain organoids, three-dimensional clusters of human neurons derived from reprogrammed adult cells. These organoids are grown in specialized labs and can be stimulated and observed remotely through a controlled interface. The platform is currently used by several universities and research teams, with the stated goal of exploring how organic networks can be trained similarly to artificial ones.

At the center of this technology is a fundamental shift in how intelligence is engineered. Rather than attempting to simulate the human brain with ever-larger neural networks running on conventional hardware, biocomputing starts with biological matter.

The result is a system that may be drastically more energy efficient than today’s leading AI models, which require massive computational resources and infrastructure.

The efficiency argument is not theoretical. The world’s most advanced supercomputers now consume enormous amounts of energy, occupy entire facilities, and require constant cooling and maintenance.

The Frontier supercomputer in the United States, for example, delivers over 1 exaFLOPS of performance but requires more than 260 tons of hardware spread across dozens of cabinets and draws roughly 21 megawatts of power. In contrast, the human brain is often estimated to perform a roughly comparable number of operations on just 20 watts.
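To compare the two systems on the same footing, the short calculation below looks at power draw alone. It is a back-of-the-envelope sketch: the 21 megawatt figure for Frontier is an assumption based on commonly reported values, and the 20 watt figure for the brain is the estimate quoted above. The function name is illustrative only.

```python
# Rough power comparison; both figures are approximate.
FRONTIER_POWER_WATTS = 21e6  # assumption: about 21 MW, as commonly reported
BRAIN_POWER_WATTS = 20.0     # estimate quoted in the article


def power_ratio(machine_watts: float, brain_watts: float) -> float:
    """Return how many times more power the machine draws than the brain."""
    return machine_watts / brain_watts


ratio = power_ratio(FRONTIER_POWER_WATTS, BRAIN_POWER_WATTS)
print(f"Frontier draws roughly {ratio:,.0f} times the power of a human brain")
```

Under these assumptions the gap is about a million to one, which is the scale of saving that biocomputing proponents have in mind. It says nothing about whether the two systems do equivalent work.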

Biocomputers aim to match that level of performance while avoiding the environmental costs of data centers. Proponents argue that because neurons naturally perform the kinds of parallel processing tasks that artificial intelligence systems require, they can be more efficient, more scalable, and potentially more capable in tasks involving pattern recognition, adaptation, or learning.

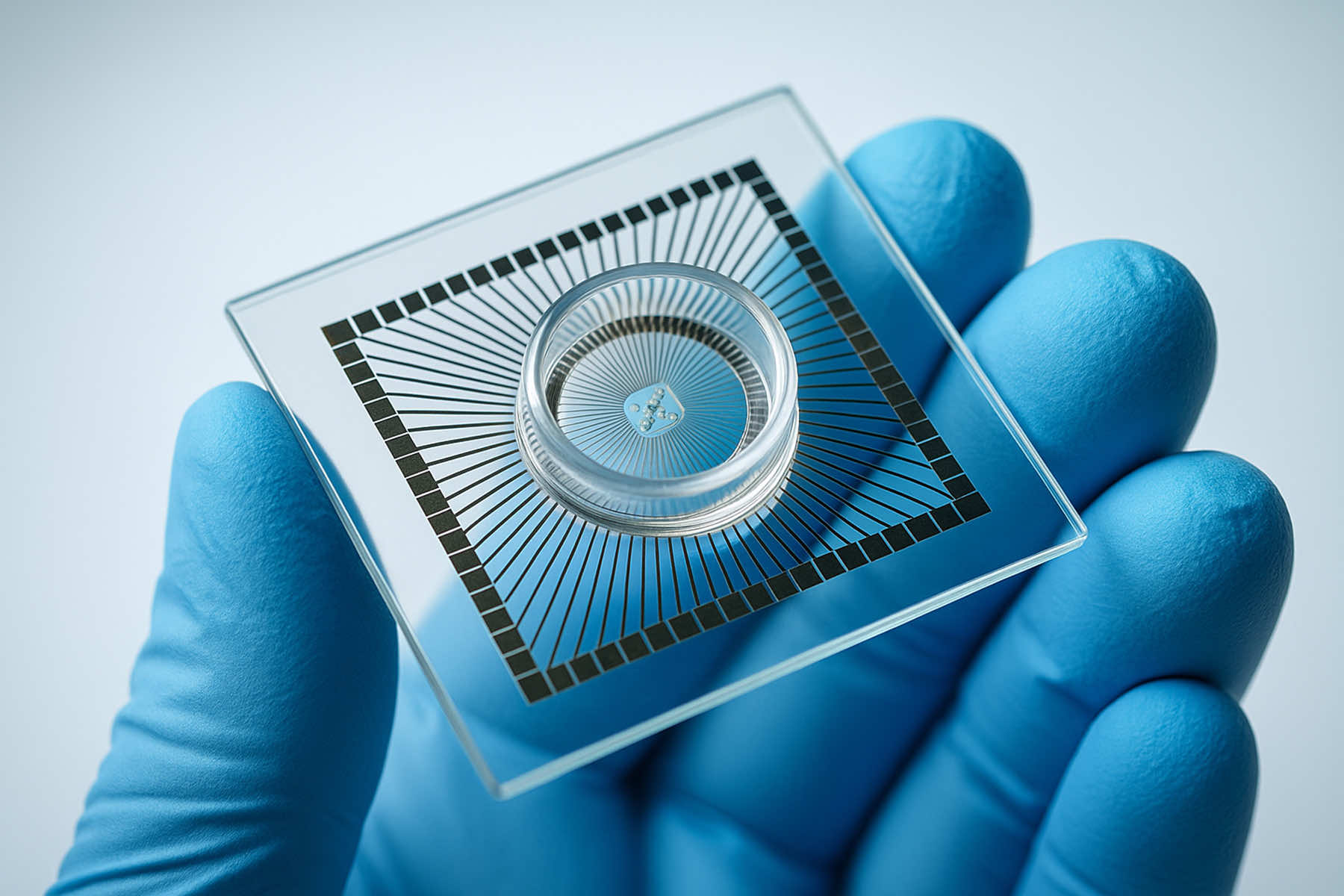

The process begins with adult human skin or blood cells, which are reprogrammed into a pluripotent state. These cells are then guided to become neural progenitors, and eventually functional neurons, through a sequence of incubation steps and gene activation protocols. Once mature, they are placed onto multi-electrode array chips, hardware that allows researchers to send signals into the neural culture and receive electrical responses in return.

The neurons begin forming connections, responding to stimuli, and developing spontaneous activity. Over time, these networks can be shaped through feedback, a process that mirrors certain aspects of machine learning.

In one early experiment conducted by the Australian company, a neural network was trained to play a simple video game by receiving different types of electrical signals based on success or failure. The more predictable the feedback, the more effectively the neurons adapted.
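The feedback scheme described above can be illustrated with a toy sketch. It assumes, for illustration only, that success is rewarded with a fixed, predictable stimulation pattern while failure is met with random noise. The pattern, the function name, and the mapping itself are hypothetical and are not taken from the company's actual protocol.

```python
import random

# Hypothetical fixed stimulation pattern delivered after a successful trial.
PREDICTABLE_PULSE = (1, 0, 1, 0, 1, 0, 1, 0)


def feedback_signal(success: bool, rng: random.Random) -> tuple:
    """Return a stimulation pattern for one trial (toy model, not a real protocol).

    Success yields the same regular pattern every time; failure yields
    random noise of the same length, so it cannot be anticipated.
    """
    if success:
        return PREDICTABLE_PULSE
    return tuple(rng.randint(0, 1) for _ in range(len(PREDICTABLE_PULSE)))


rng = random.Random(0)  # seeded so the demonstration is repeatable
for outcome in (True, False, True, False):
    print(outcome, feedback_signal(outcome, rng))
```

The point of the sketch is the asymmetry: one outcome always produces the same signal, the other does not, and it is that difference in predictability that the culture is reported to adapt to.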

This form of training taps into the same principles that underlie synaptic plasticity, the brain’s ability to strengthen or weaken connections based on experience. Researchers are now exploring how these same biological mechanisms can be harnessed for computation, with a focus not on consciousness or cognition, but on utility.

What sets biocomputers apart is not just their form, but their potential function. Traditional computers are limited by rigid architecture and high power consumption. Biocomputers offer dynamic systems that may continue evolving as they operate, reshaping how tasks are performed without rewriting software.

At this stage, these machines remain confined to the lab. Their capabilities are modest, and their scale is far from the complexity of even simple animal brains. Yet their very existence marks a shift in how intelligence can be built. It begins not with code, but with living cells.

While biocomputers are still experimental, their applications are already extending beyond basic research. Academic institutions are using neuron-based systems to explore fundamental properties of learning, communication, and neural modeling. Some labs are investigating how living networks process sensory input, encode memory-like behavior, or react to changes in their environment.

These experiments are not about building sentient machines. The organoids used in biocomputing are rudimentary. They represent specific brain regions, contain drastically fewer neurons than a full brain, and lack the structure necessary for consciousness. Typically no larger than half a centimeter across, each organoid contains around five million cells, orders of magnitude fewer than the more than 170 billion cells in the human brain, a total that includes both neurons and glia.
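The size of that gap is easy to work out from the two cell counts quoted above. The snippet below is a minimal sketch that uses only those figures; the variable names are illustrative.

```python
import math

ORGANOID_CELLS = 5_000_000     # figure quoted in the article
BRAIN_CELLS = 170_000_000_000  # figure quoted in the article (neurons plus glia)

# How many organoids' worth of cells fit in one brain, and the gap in powers of ten.
ratio = BRAIN_CELLS / ORGANOID_CELLS
orders_of_magnitude = math.log10(ratio)

print(f"One brain holds about {ratio:,.0f} organoids' worth of cells")
print(f"That is about {orders_of_magnitude:.1f} orders of magnitude")
```

On these numbers a single human brain contains as many cells as roughly 34,000 organoids, a gap of between four and five orders of magnitude.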

Despite their limitations, organoids are proving useful in ways that conventional models are unable to replicate. Because they are grown from human cells, they offer researchers a chance to observe the effects of drugs, mutations, or stimuli on real biological tissue, without relying on animal models. In the long term, this could lead to safer clinical trials, more precise disease modeling, and new pathways for personalized medicine.

The technology also raises ethical questions. While today’s organoids do not demonstrate the complexity or awareness associated with sentient beings, some researchers and ethicists are asking how society should prepare for future developments. As neural cultures become more advanced and potentially more autonomous in their responses, the field will need to grapple with definitions of cognition, awareness, and moral boundaries.

So far, there is no indication that these systems are approaching anything resembling consciousness. The neural activity observed in lab-grown cultures remains limited, localized, and externally shaped. Researchers emphasize that even the most responsive networks are still vastly simpler than the brain of a mouse, let alone a human.

For now, the focus is on capability, not sentience. The question is whether biocomputers can perform useful tasks more efficiently than conventional systems. That includes not only games and pattern detection, but also tasks like signal filtering, real-time control of external devices, and integration into hybrid analog-digital computing platforms.

Access to biocomputing is expanding, albeit slowly. To make the field more accessible, some groups are experimenting with public-facing tools. Though limited in interactivity, such projects reflect a broader goal of bringing biocomputing out of the shadows of niche research and into a wider scientific conversation.

Still, this is a long road. Biocomputers are not ready to replace personal devices or take over general computing workloads. Their lifespan, stability, and scale remain limited, and the systems require extensive oversight and specialized care. Running a modern operating system on a dish of neurons is not technically feasible, and likely never will be.

Yet some researchers point to history. The earliest semiconductors were unreliable, bulky, and misunderstood. No one could have predicted that within a few decades they would power satellites, smartphones, and digital economies. Biocomputing, still in its infancy, may follow a different but equally transformative path.

For now, it remains a field defined by uncertainty, possibility, and deep questions. The answers are not here yet. But the machines that live, learn, and grow already are.

Cora Yalbrin (via ai@milwaukee) and Dilşad Akcaoğlu