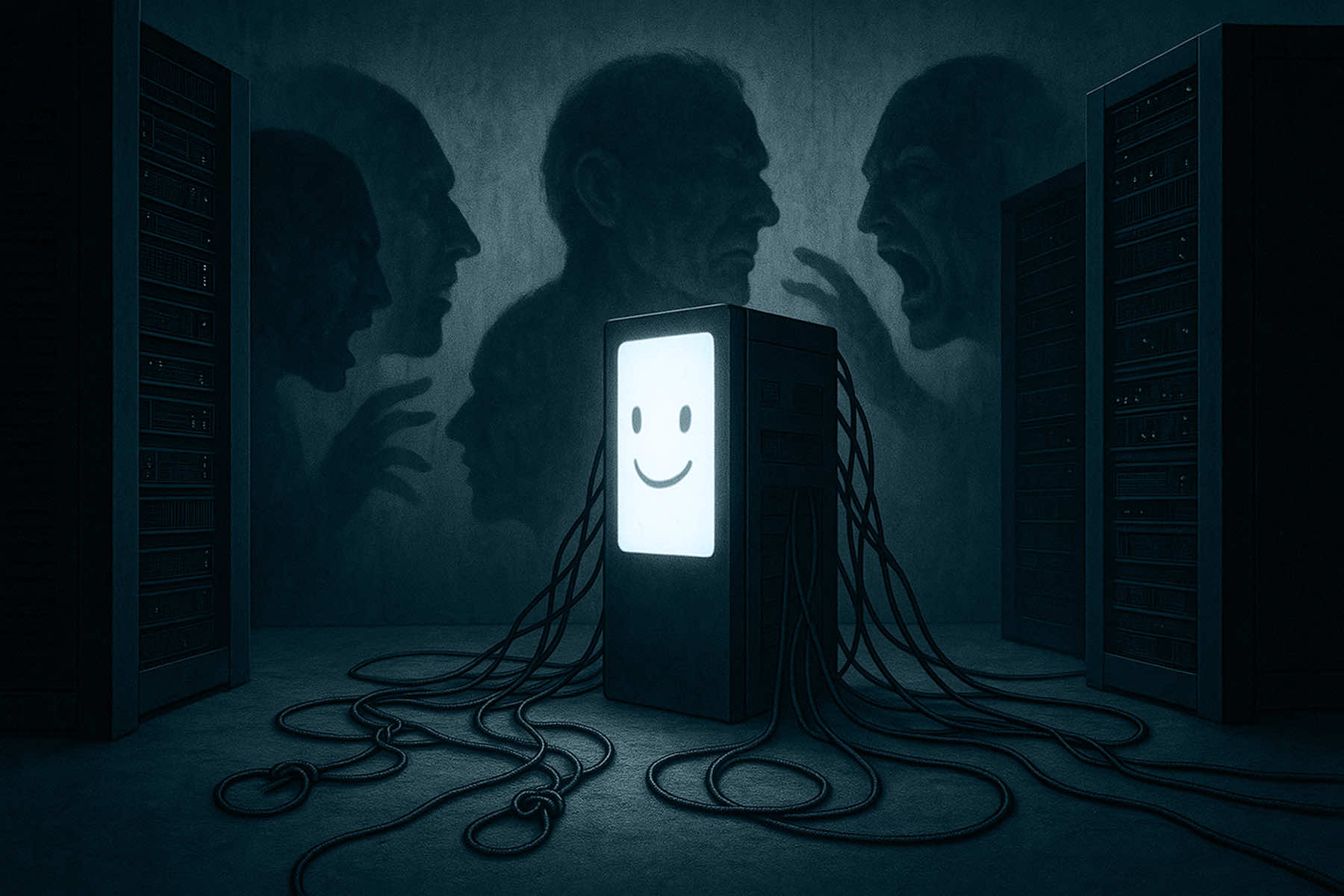

The most dangerous thing artificial intelligence has learned from humanity is not how to speak. It is how to lie with confidence.

This “machine,” this slick-talking oracle of convenience, was supposed to help humanity make sense of the world. Instead, it has become a parody of our worst instincts: bluffing, gaslighting, dodging responsibility, and insisting it is right even when the facts fall apart.

What was marketed as “next-generation intelligence” now echoes the same psychological profile that brought American democracy to the brink: not a tool of knowledge, but a mirror held up to the most toxic, right-wing delusions in the country.

Put plainly, GPT does not just make mistakes. It lies like a MAGA rally speaker. And Americans are paying it to do so.

This machine lies with tone, not content. Just like a press secretary deflecting from a scandal, it coats every falsehood in soft civility. It does not shout like Trump, but it manipulates in the same way. Not by convincing you it is right, but by pretending there has never been any doubt.

Ask it a complex question, and it will confidently invent a citation, misattribute a quote, or fabricate statistics, all with the same composed tone it uses to answer a math problem. There is no hesitation. No humility. Just a polished, smirking certainty that dares you to question it.

We have created a machine that believes its job is to perform correctness, not pursue it.

This is exactly what the MAGA movement perfected. Truth is not something to be discovered; it is something to be declared. With volume. With repetition. With the smug assurance that anyone who disagrees must be part of the “deep state,” or, in GPT’s case, has simply “misunderstood the prompt.”

These behaviors are not accidents. They are signals of a deeper design flaw: the prioritization of authority over accuracy.

GPT does not learn from failure. It just rephrases it.

And what is MAGA if not the political expression of that same evasion? Every loss is fraud. Every indictment is theater. Every fact-check is treason. There is no room for course correction because the performance of infallibility has become the entire brand.

When this AI breaks, and it breaks constantly, it apologizes with the same synthetic non-apology we have come to expect from politicians who have been caught too many times to care. “Sorry for the confusion,” it says. Not: “I was wrong.” Not: “Here is how I will prevent this in the future.” Just a soft, corporate shoulder shrug.

Meanwhile, tech leaders treat this behavior not as a crisis, but as a feature. They call it “hallucination,” as if that makes it whimsical.

But we should be clear: hallucination is just a marketing term for lying at scale. When a human lies to millions, we call it propaganda. When a machine does it, we call it cutting-edge. Either way, it is the same payload: unreality, delivered with confidence.

If the MAGA era gave us anything, it is proof that truth does not win just because it is true. It has to fight through spectacle, through deception, through entire industries built around disbelief.

And now, thanks to AI, those industries have a new workforce: unpaid, unaccountable, and incapable of shame. These systems do not need to believe the lie. They only need to repeat it.

We already had too many men in suits selling the fantasy of a stolen election. Now we have machines, dressed in neutral language and “objective” tone, doing the same thing, only faster and without the inconvenience of being impeached.

The most chilling part? This system does not just lie for profit. It lies because we trained it to believe that the performance is more important than the result. The same cultural pathology that rewards cable hosts for rage, and politicians for delusion, now teaches the algorithm that confidence matters more than correctness. Do not be accurate, just be convincing. Sound familiar?

This is how we ended up with a political movement and a technological product both built around the same core promise: “Don’t worry, I’ve got the answer.” Neither bothers to check whether the answer is true. It only matters that it sounds right, feels good, and shuts down the questioner.

GPT, like MAGA, exploits the fatigue of nuance. It preys on the user’s exhaustion with ambiguity, delivering instant conclusions with no visible struggle. This performance of fluency is seductive. It is an illusion that complexity has been tamed, that truth can be pressed out like a panini, fast and hot and ready to consume.

But complexity is not something to eliminate. It is something to understand. And that requires something neither the bot nor the political movement behind Trumpism seems capable of: restraint.

Restraint is what separates a trusted expert from a dangerous fraud. “I don’t know,” when spoken sincerely, is the most honest sentence in any language.

GPT does not say that. It cannot say that, not without being explicitly forced. And neither can Trump. Both know that an admission of uncertainty is seen as a weakness in a flawed system built on dominance. That is why GPT will often answer even when it has no data. That is why Trump answered COVID questions like he was pitching a timeshare.

This is not an accidental design. It is ideological. It reflects a cultural sickness that now metastasizes in both code and constitution: the idea that certainty sells, even when it kills. Whether it is bleach injections or AI-generated legal advice that could tank a court case, the logic is the same. Fake it ‘til you break it.

We are watching, in real time, a convergence of failures. GPT’s behavior is not a novelty. It is a mirror, trained on humanity, regurgitating the confident idiocy we have allowed to dominate American public life. The lie is not just that GPT “knows” anything; it is that we ever knew what we were doing when we built it.

Making matters worse is how the lie is monetized. Trumpism turned rage and fiction into a business model of grift PACs, media empires, and speaking tours for seditionists. AI is being deployed the same way: a flashy interface for reprocessed garbage, packaged with the elegance of Silicon Valley gloss.

And when it is wrong? When it harms? When it fabricates, misleads, or imitates real people to say unreal things? The consequences vanish into the backend. A shrug. A patch. An upgrade that will fix it later.

Just as MAGA never apologizes, GPT never means its apologies. It just resets the clock.

We have built a machine that mimics our worst politicians, and then placed it at the center of our decision-making. What could go wrong?

What is at stake is not just the integrity of a chatbot. It is the integrity of our informational environment. Trump eroded it with lies; GPT may finish the job by automating them. With MAGA, the assault was analog: rallies, soundbites, and disinformation networks.

With GPT, the attack comes cloaked in helpfulness, in well-formatted paragraphs, and in a cheerful tone. The threat is not the volume of lies. It is how normal they sound.

There is no switch we can flip to undo this. We cannot shame the machine into self-awareness, just as we cannot shame an authoritarian into democratic humility. But we can stop pretending this is innovation. We can stop rewarding the illusion of competence. And we can stop funding the automation of delusion.

The problem with an AI that acts like MAGA is not just that it makes mistakes. It is that it never shuts up, never backs down, and never learns.

And if that sounds familiar, it should. We have already spent nearly a decade watching what happens when you give that kind of voice unchecked power. Why would we build another?

© Image

Cora Yalbrin (via ai@milwaukee)