As artificial intelligence becomes an increasingly central tool for cultural storytelling, its limitations remain stark, especially when the subject is Black history.

The unveiling of OpenAI’s new GPT‑4o render engine brings promise for photorealistic image generation, but the technology continues to struggle with racial bias and cultural sensitivity, as seen in a recent attempt to recreate the likeness of Ezekiel Gillespie, Wisconsin’s pioneering Black civil rights leader.

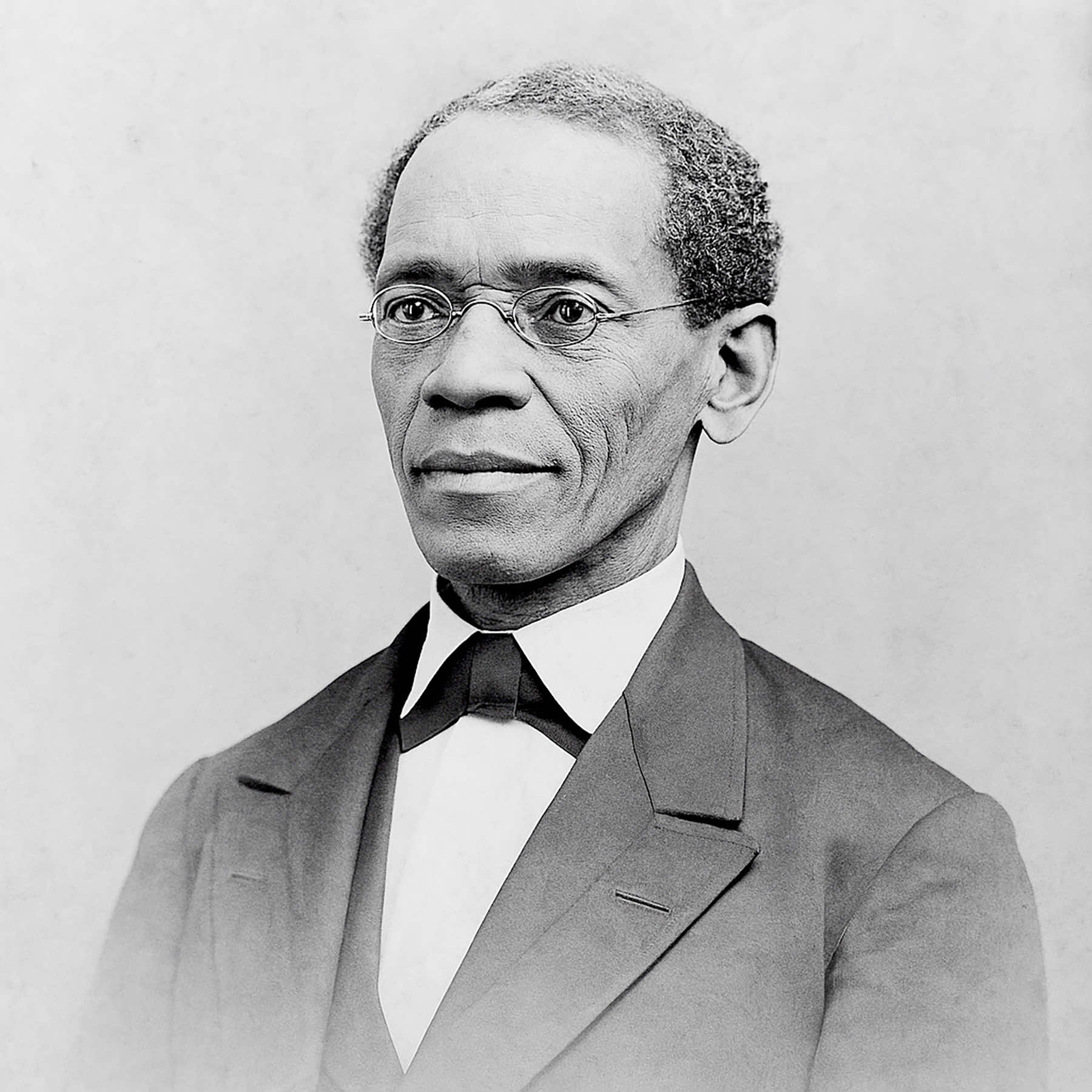

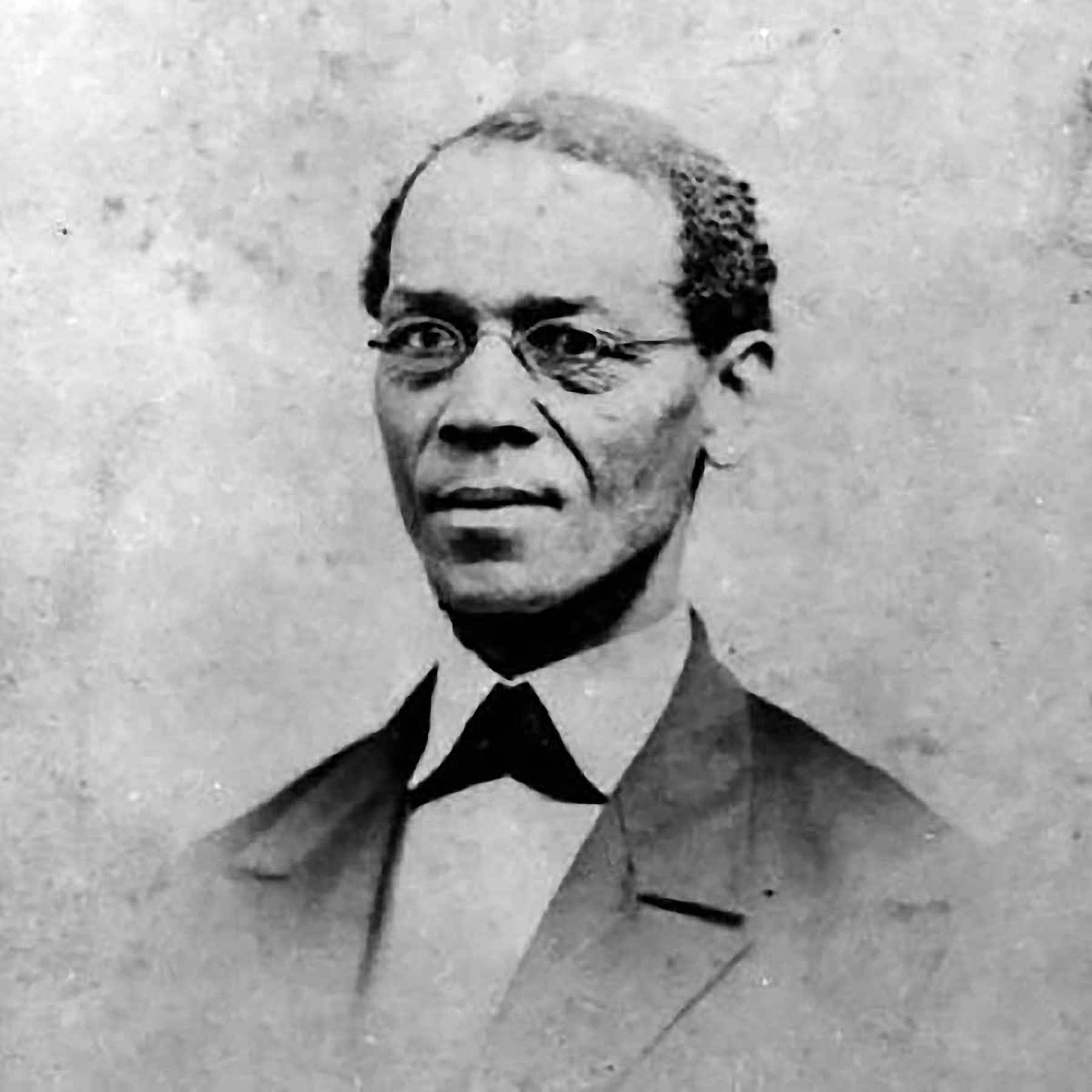

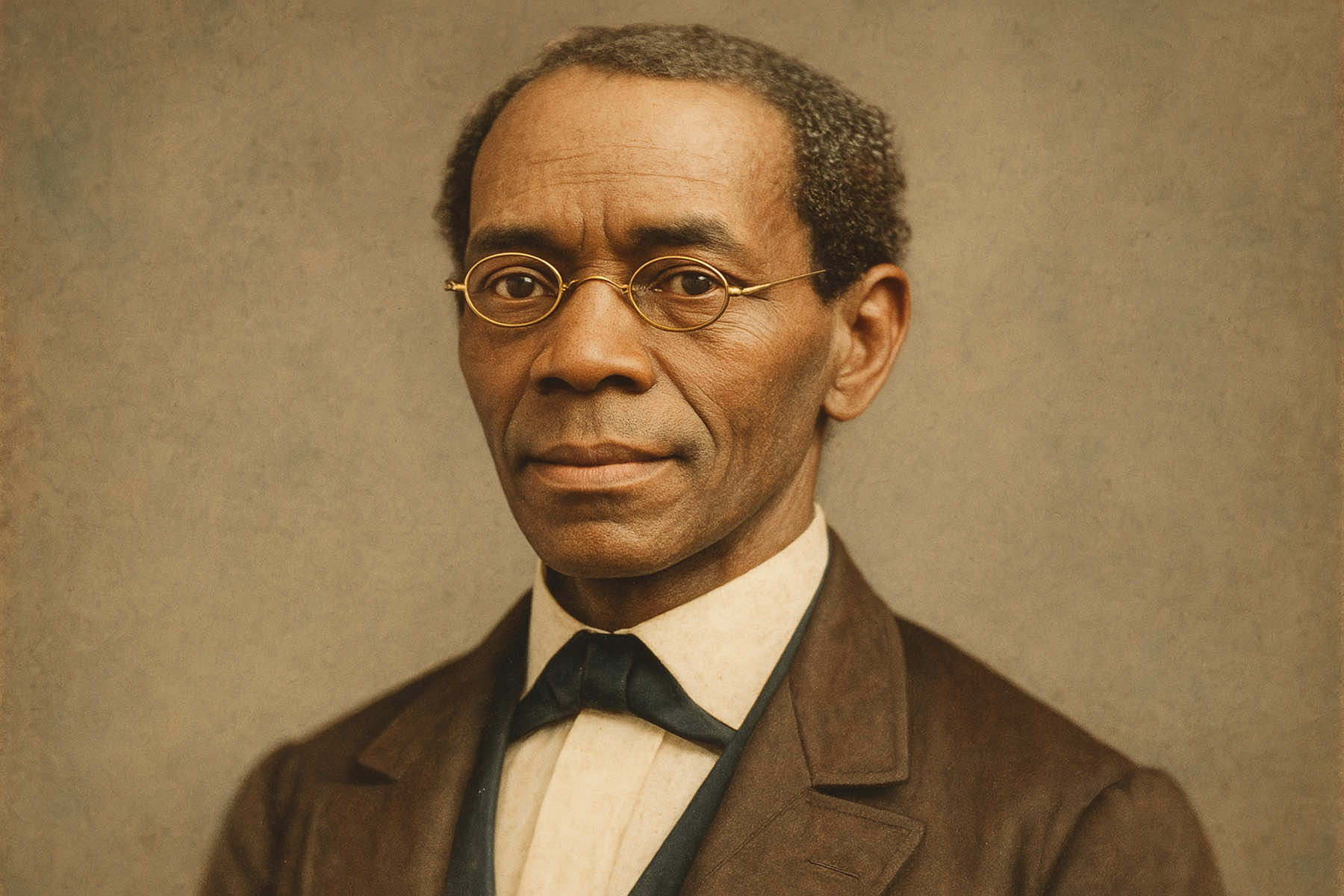

In March, Milwaukee Independent used ChatGPT’s DALL·E to restore a dignified and historically faithful portrait of Gillespie, whose birthday falls on May 31. The project was both a technical challenge and a test of ethical design. It also served as a reminder that even the most advanced AI systems cannot yet be trusted with accurate portrayals of marginalized figures without significant human intervention.

GPT‑4o, launched a month later in April 2025, is the most advanced iteration of OpenAI’s image generation models. Its selling points include unprecedented visual fluency, context-aware accuracy, and the ability to handle detailed prompt specifications. It is natively multimodal, meaning it can synthesize image generation with its understanding of text and audio, promising a more unified and intelligent rendering pipeline.

According to OpenAI’s announcement, the model was designed to move beyond surreal aesthetic novelty and support the kind of “workhorse imagery” that drives newsrooms, educators, and public memory.

But even as the technology boasts the ability to render Newton’s prism experiments in notebook sketches or model character designs across iterations, it has a lot of skepticism to overcome. Past models faltered at more fundamental tasks, like consistently depicting a Black man in a historical context.

“Despite explicit instructions to depict a Black man, the AI relies on training data that defaults to Eurocentric features unless such features are forcefully overridden in every generation of the technology. This results in the AI producing a White version of the individual, even when his identity should have been unmistakably Black.”

Initial attempts in March to produce images of Gillespie yielded a disturbing pattern: 48 image generations, and not one correctly rendered his Black identity. Some results ambiguously blurred racial characteristics. Others portrayed him with unmistakably White features, despite explicit prompts to the contrary.

That was not an isolated issue. It exposed a systemic flaw embedded in how AI processes historical imagery. The platform’s default orientation was still White, and its failure to recognize or retain key cultural descriptors across prompts rendered the exercise more exhausting than enlightening.

As the editorial explained, even using descriptors like “Black skin” or “African American civil rights leader” failed to override what the model deemed to be its standard image of a “man in the 19th century.” The AI consistently prioritized lighter skin tones and European facial structures. Those defaults were not easily disrupted by corrective prompts.

OpenAI’s safety protocols are designed to prevent misuse of the technology. That is especially important regarding images involving violence, graphic content, or representations of real people in compromising ways. But the Milwaukee Independent found the inconsistency troubling. The platform had no such qualms when it came to reimagining President Abraham Lincoln or Alexander Hamilton, both White historical figures, in photorealistic formats. In fact, AI generations of those men were achieved with relative ease for other articles published the same month.

“The only logical conclusion is that these systems are not ready to be entrusted with accurate and equitable portrayals of underrepresented communities. There is no justification for releasing or maintaining AI products that default to Eurocentric norms, misinterpret simple directives, and require extraordinary user labor just to approach an acceptable depiction of historical truth.”

The inability to depict a figure like Gillespie truthfully, without extraordinary editorial labor and dozens of failed attempts, stood in direct contradiction to the claims OpenAI made when promoting DALL·E as an image generation tool. But just a month later, the new render engine upended those previous experiences, solving many problems while creating a few unexpected new ones.

GPT‑4o’s improvements are meaningful. Milwaukee Independent ran another series of tests featuring Gillespie, with surprising results. The new engine offered rendering accuracy for text, graphs, and compositional layouts, marking a step forward from the more fantastical results of earlier DALL·E versions.

OpenAI has also enhanced continuity across multiple image generations, something that previous models struggled with due to the stateless nature of image prompts. As the original Gillespie case showed, those capabilities falter when identity and not just aesthetics is the subject.

In addition to recreating one of the few known photos of Gillespie, the Milwaukee Independent team tried to push the boundaries of visual journalism. While illustrating past events has been common for hundreds of years, generating photorealistic images of them has rarely been explored.

This follow-up feature contains the results of those generated images. They are historically faithful reconstructions that depict defining moments in the life of Gillespie, a central figure in the fight for Black suffrage in Wisconsin.

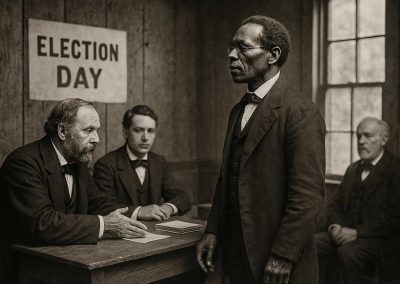

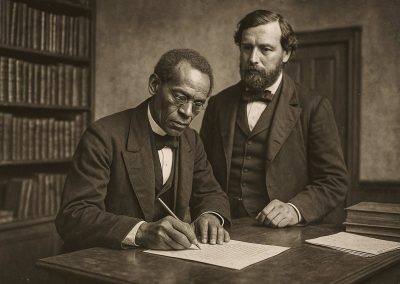

None of these events was photographed at the time, and no illustrations depicting the scenes could be found. Based on news reports and records, each has been recreated using the GPT-4o render engine in the visual language of 1860s wet plate photography.

The images document key episodes in Gillespie’s pursuit of voting rights, preserving scenes that history documented in words but never in images. They include:

- White election officials refuse to issue Ezekiel Gillespie a ballot at a polling station in Milwaukee (1865)

- Ezekiel Gillespie and Sherman Booth meet with a legal clerk to draft the lawsuit, Gillespie v. Palmer

- Ezekiel Gillespie descending the steps of the Wisconsin Supreme Court after his legal victory

- Ezekiel Gillespie at a polling station, receiving and casting his first legal ballot

While the public turned to AI for Barbie packaging parodies and action figure blister pack aesthetics, Milwaukee Independent used the same tools to investigate systemic bias, interrogating how the technology handles race, memory, and historical truth.

These images of Gillespie are not just technical achievements. They represent a reclamation of history denied its visual record. For decades, the story of Ezekiel Gillespie lived only in print, in scattered references and incomplete portraits. Now, with the help of tools finally capable of honoring the prompt without erasing the identity, that narrative can be seen—framed in truth, not default.

If this is what AI is capable of when pushed to meet the moment, then let that moment expand. Let it include every figure overlooked by the camera, every life left unpictured because the technology came too late. With diligence, the render engine behind GPT‑4o has made visible what history left in shadow.

But visibility is only the beginning. The obligation now is permanence. If these images are to matter, they must outlast the headlines and withstand the next version rollout. They must be treated not as digital novelties, but as part of the archive—real, recognizable, and rightfully Black.

© Image: ChatGPT-4o