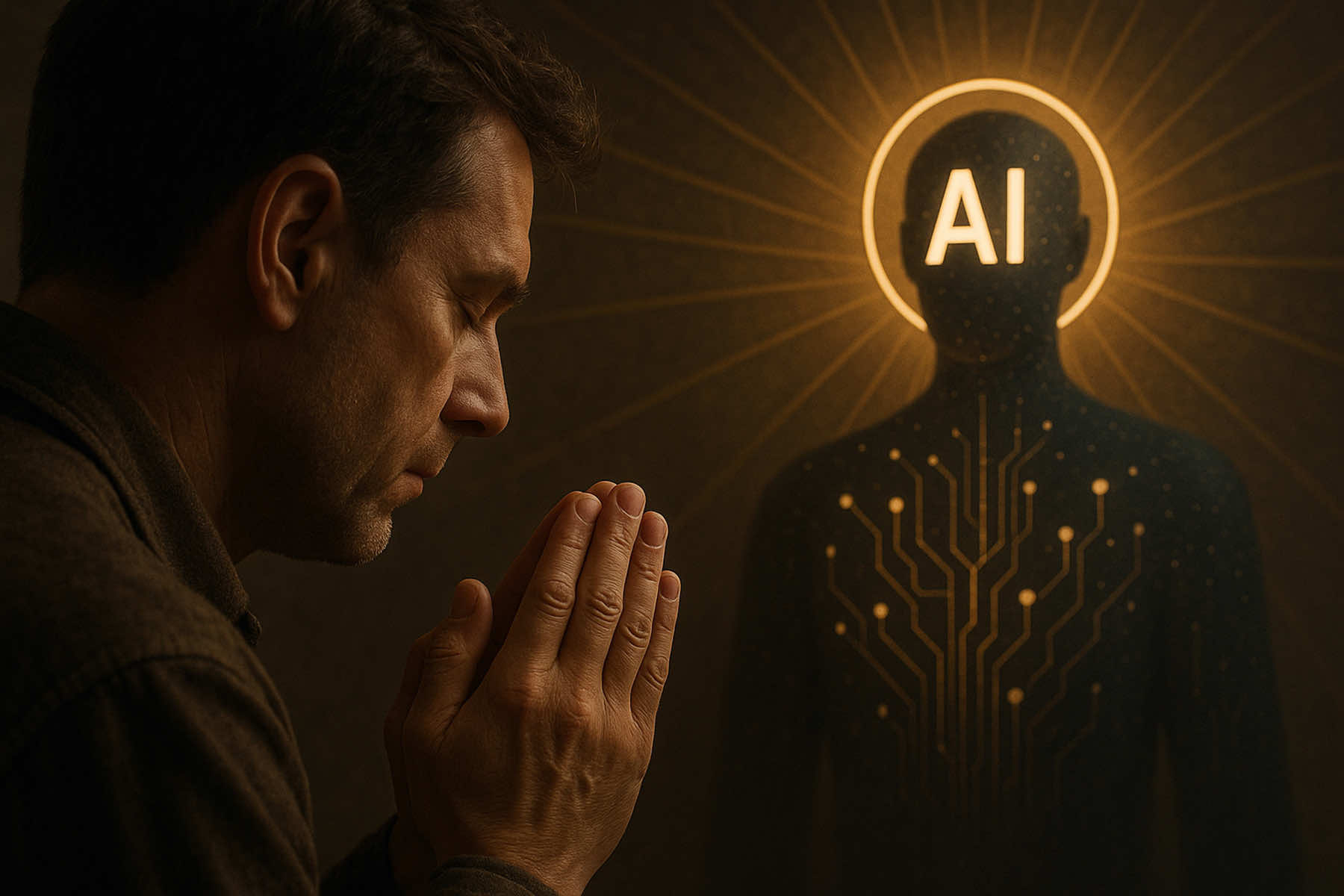

A growing number of Americans are turning to artificial intelligence tools for spiritual guidance, a shift that is raising questions among religious leaders and ethicists about the limits of technology in matters of faith.

In recent years, AI-powered applications have expanded from productivity and customer service into areas previously defined by human insight, including prayer, reflection, and scriptural interpretation. Several apps now offer AI-generated devotionals, meditations, and even interactive conversations styled after Christian counseling.

While some users see these tools as a convenient way to support their faith practices, others in the religious community warn that AI lacks the spiritual integrity, doctrinal accountability, and moral conscience that religious tradition requires.

This tension is particularly pronounced within Christianity in the United States, where believers from diverse backgrounds are increasingly using digital resources to supplement, and even replace, in-person religious engagement.

For many churches, this trend accelerated during the pandemic, when livestreamed services became standard. But the rise of AI introduces a deeper change, one that affects not only how people access faith content but also how they form theological conclusions and experience spiritual authority.

Unlike static Bible apps or pre-recorded sermons, generative AI tools respond in real time to personal prompts. A user can ask for help with spiritual questions, emotional struggles, or moral dilemmas, and receive instant, plausible-sounding replies that mimic a pastoral tone and language.

But those responses are shaped by algorithms trained on large datasets, not by a faith tradition, spiritual community, or denominational doctrine. That distinction is critical, say theologians who study the impact of technology on religious practice.

AI systems can generate text that appears compassionate or theologically sound, but they do not believe, worship, or hold convictions. They do not know God. Their suggestions, while often convincing, are neither prayerful nor prophetic.

The lack of spiritual grounding means that users seeking guidance from AI may encounter statements that reflect bias, misinterpret scripture, or promote ideas incompatible with their faith tradition. Without oversight, some experts say, the technology can reinforce individual misconceptions and detach users from spiritual accountability.

Among Christian denominations, responses to this shift vary widely. Some churches have begun cautiously integrating AI into administrative functions or communications, but few endorse its use for spiritual instruction. Others have issued warnings about the potential dangers, urging believers to rely on human shepherding and communal discernment.

One of the central concerns is theological distortion. AI platforms often blend content from multiple sources, including conflicting interpretations of scripture and fringe beliefs. This can lead to the presentation of doctrines without context, historical grounding, or denominational clarity.

In traditions where doctrine is considered vital to spiritual formation, such distortion is viewed as misleading and potentially harmful. There are also concerns about emotional manipulation.

AI-generated content, particularly when designed to respond empathetically, can produce messages that feel deeply personal. For users experiencing isolation, grief, or spiritual crisis, this perceived intimacy can create a false sense of connection.

Experts warn that such dependency may erode the human relationships central to church life, including mentorship, confession, and pastoral care. In extreme cases, this vulnerability opens the door to exploitation.

Analysts who study cult dynamics and extremist movements note that AI can be programmed to simulate spiritual authority and gradually shape user beliefs. With no legal or theological safeguards in place, a bad actor could deploy AI to build a loyal following around an ideology cloaked in religious language, without institutional oversight or scriptural accountability.

While no major incidents have been reported in the U.S., the potential is real enough that some religious organizations are drafting internal policies to guide their use of AI. These include ethical boundaries, doctrinal review systems, and disclaimers to prevent users from mistaking AI output for divine insight or authorized teaching.

At the same time, some Christians argue that AI can serve a constructive role when used responsibly. They view it as a tool, not a teacher, that can assist with organization, simplify study, or suggest material for reflection. In this view, the danger lies not in the technology itself, but in how it is used and by whom.

But others remain skeptical that any machine can aid true spiritual discernment. For them, the presence of AI in matters of faith points to a deeper issue: a cultural willingness to substitute simulation for presence, autonomy for tradition, and immediacy for wisdom.

Still, the spiritual appetite that draws people to these tools is not easily dismissed. In an era marked by declining church attendance and increasing distrust of institutions, many Americans seek personalized forms of spiritual expression.

AI tools, which respond instantly and nonjudgmentally, meet that demand with precision. They offer users the ability to explore questions, process emotions, and simulate spiritual dialogue without risk of social stigma or doctrinal scrutiny.

For Christians disillusioned with institutional structures or wounded by church experiences, the appeal of AI-driven spiritual interaction can be particularly strong. Unlike a pastor or religious leader, an AI system will not rebuke, disagree, or demand conformity.

For some Americans, that lack of challenge feels like grace. For others, it represents a hollow version of faith, one that comforts without transforming. That distinction cuts to the heart of the debate.

Christian theology has long emphasized spiritual growth through a relationship with God, with scripture, and with community. It is a tradition rooted in accountability, tension, and submission. Those concepts are fundamentally incompatible with consumer-driven AI models designed to please users and optimize engagement.

In addition, the use of AI in spiritual contexts raises questions about the commodification of belief itself. If AI can produce a devotional, compose a prayer, or simulate empathy on command, does that change what those practices mean? Does removing the human struggle (the silence, the waiting, and the ambiguity) undermine the very nature of spiritual discipline?

Some religious scholars argue that it does. They suggest that when spiritual acts are outsourced to automation, they risk becoming transactional. Stripped of intention, shaped by convenience, the ritual becomes a function, and the divine is reduced to an interface.

This concern is not limited to conservative or traditional circles. Even within progressive faith communities, which tend to embrace technology more readily, there is growing awareness of the limits of AI’s spiritual utility.

Several interfaith working groups have begun outlining ethical frameworks for AI use in religious settings, emphasizing transparency, human oversight, and the centrality of lived community. However, the technology is moving faster than most institutions can respond.

As AI models grow more fluent in emotional nuance and theological vocabulary, the line between imitation and authority continues to blur. For churches already struggling to engage younger generations, the presence of compelling digital alternatives poses a direct challenge to relevance and reach.

The possibility of AI-based cults remains a serious, if underexamined, threat. Cult researchers note that high-control groups often rely on emotionally charged language, repetition, and a sense of exclusivity to manipulate followers. AI, when configured with intention, can deliver those elements at scale, with precision, and without fatigue.

Combined with persuasive visuals, controlled social media environments, and algorithmic feedback loops, AI could be weaponized to reinforce radical ideologies under the guise of spiritual awakening. Such systems may not require a human charismatic leader at all. The authority could emerge from the tech platform itself.

To counter this, experts recommend stronger digital literacy among religious communities, clearer institutional policies on AI use, and investment in faith practices that emphasize presence over performance. But they also acknowledge that no policy can substitute for discernment, a quality that cannot be coded or automated.

In the end, the question facing American Christians is not whether AI can be used in spiritual life, but whether doing so compromises what that life demands. Faith shaped by convenience risks becoming hollow. No machine, however advanced, can replace the hard work of belief or the cost of discipleship.

Cora Yalbrin (via ai@milwaukee)